Large Language Models (LLMs) like GPT and LLaMA are revolutionizing how we interact with technology. But unleashing their full potential requires serious computing power, especially when it comes to GPU memory. Knowing how to estimate this need is crucial, both for acing interviews and for deploying these models effectively in the real world.

The Magic Formula

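Here's a simple formula to estimate the GPU memory (M) required to serve an LLM:

$$M\ (\text{GB}) = \frac{P \times 4\text{B}}{32 / Q} \times 1.2$$

Let's break it down:

P: Number of parameters in the model (e.g., 70 billion for a 70B LLaMA model)

4B: 4 bytes, the memory one parameter occupies at 32-bit precision

Q: Number of bits per parameter used when loading the model (e.g., 16 or 32). Dividing by 32/Q scales the 4-byte baseline down to Q bits, so a 16-bit model needs half the memory of a 32-bit one.

1.2: Overhead multiplier that adds 20% on top of the weights for everything else the GPU holds during inference, such as activations and the KV cache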
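The formula translates directly into a small helper. This is a minimal sketch: the function name `estimate_serving_memory_gb` is hypothetical, and it assumes 1 GB = 10^9 bytes, which matches the arithmetic in the example that follows.

```python
def estimate_serving_memory_gb(num_params: float, bits: int, overhead: float = 1.2) -> float:
    """Estimate GPU memory (GB) to serve a model: M = (P * 4B) / (32 / Q) * overhead.

    Hypothetical helper; treats 1 GB as 10**9 bytes.
    """
    bytes_fp32 = 4                                          # 4B: bytes per parameter at 32-bit
    weight_bytes = num_params * bytes_fp32 / (32 / bits)    # scale the 32-bit size down to Q bits
    return weight_bytes * overhead / 1e9                    # add overhead, convert bytes to GB


print(round(estimate_serving_memory_gb(70e9, 16), 1))  # 70B model loaded at 16-bit
```

Keeping `overhead` as a parameter lets you swap the 20% rule of thumb for a larger margin if your workload needs one.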

Example: Taming a 70 Billion Parameter Beast

Let's say you want to deploy a 70B parameter LLaMA model with 16-bit precision:

M (GB) = (70,000,000,000 4 / 16) 1.2 = 210 GB

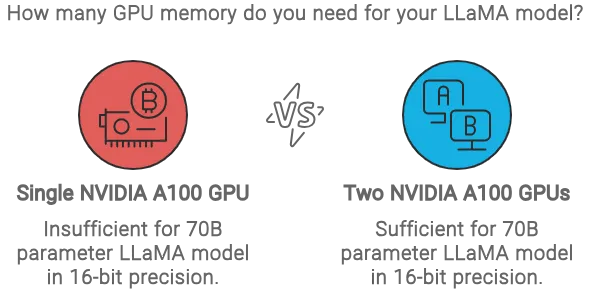

This means you'd need a whopping 210GB of GPU memory! Even a powerful NVIDIA A100 GPU with 80GB of memory wouldn't cut it alone; you'd need multiple GPUs to handle this massive model.
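Turning a memory estimate into a GPU count is a ceiling division. The sketch below is a lower bound under stated assumptions. It assumes the model can be split evenly across GPUs and ignores per-GPU framework overhead. The helper name `min_gpus_needed` is hypothetical. In practice, serving frameworks may constrain how many GPUs a model can be split across.

```python
import math


def min_gpus_needed(required_gb: float, gpu_memory_gb: float) -> int:
    """Smallest number of GPUs whose combined memory covers required_gb.

    Hypothetical helper; assumes an even split and no per-GPU overhead.
    """
    return math.ceil(required_gb / gpu_memory_gb)


# 70B parameters at 16-bit: (70e9 * 4 bytes) / (32 / 16) * 1.2, in GB (10**9 bytes)
required = 70e9 * 4 / (32 / 16) * 1.2 / 1e9
print(f"{required:.0f} GB -> {min_gpus_needed(required, 80)} x 80 GB A100")
```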

Why This Matters

Interview Success: This question pops up frequently in LLM interviews. Mastering this formula shows you understand the practical aspects of deploying these models.

Avoiding Bottlenecks: Underestimating GPU memory requirements can cause out-of-memory crashes or force workarounds that slow inference down. This formula helps you choose hardware with enough headroom.

Cost Optimization: GPUs are expensive! Accurately estimating memory requirements prevents overspending on unnecessary hardware.

Beyond the Basics

This formula provides a solid foundation, but other factors also influence memory usage, such as batch size, sequence length, and the inference techniques you use.

Mastering LLM Deployment

Understanding GPU memory requirements is just one piece of the puzzle. To truly master LLM deployment, explore advanced techniques like model parallelism, quantization, and efficient inference engines.
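Quantization plugs straight into the formula: loading the weights at fewer bits (a smaller Q) shrinks the estimate proportionally. The sketch below applies the same formula to a 70B model at several precisions. It assumes the 20% overhead still holds and ignores the small extra storage that quantization schemes add (e.g., scaling factors), so treat the numbers as rough.

```python
# Memory estimate for a 70B model at several load precisions, using
# M = (P * 4B) / (32 / Q) * 1.2, with 1 GB treated as 10**9 bytes.
PARAMS = 70e9
BYTES_FP32 = 4     # 4B: bytes per parameter at 32-bit precision
OVERHEAD = 1.2     # 20% rule-of-thumb overhead


def memory_gb(bits: int) -> float:
    """Estimated serving memory in GB when weights are loaded at `bits` bits."""
    return PARAMS * BYTES_FP32 / (32 / bits) * OVERHEAD / 1e9


for bits in (32, 16, 8, 4):
    print(f"{bits:>2}-bit: {memory_gb(bits):6.1f} GB")
```

Under these assumptions the estimates come out to roughly 336, 168, 84, and 42 GB. At 4-bit, the 70B model could fit on a single 80 GB A100 by this estimate.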

By combining this knowledge with the memory estimation formula, you'll be well on your way to deploying and scaling LLMs effectively.